Biometric technologies such as fingerprint scanning, facial recognition, and voice recognition have become integral to modern life, promising enhanced security and convenience. However, a growing concern known as function creep threatens to undermine these benefits. Function creep occurs when biometric data, collected for a specific purpose, is repurposed for unrelated uses without transparency or consent.

Because biometric data such as a palm vein pattern or facial geometry cannot be changed, this expansion raises significant ethical, privacy, and security concerns. This article explains how function creep works and why it is a problem, then examines four real-world cases that illustrate its impact.

Function creep in biometrics refers to the gradual expansion of biometric data usage beyond its original purpose, often without user consent or transparency. For example, a fingerprint collected for workplace attendance might later be used for unrelated purposes like credit scoring or law enforcement surveillance.
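The definition above can be made concrete with a small sketch. It assumes a hypothetical `BiometricRecord` type that stores the purposes declared at collection time, and a hypothetical `is_function_creep` helper that flags any later use outside them. Neither name comes from a real library, and the purpose labels are illustrative only.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class BiometricRecord:
    """Hypothetical record: who it belongs to and what it was collected for."""
    subject_id: str
    modality: str                        # e.g. "fingerprint"
    declared_purposes: FrozenSet[str]    # purposes the subject was told about


def is_function_creep(record: BiometricRecord, requested_purpose: str) -> bool:
    """Return True when the requested use falls outside the declared purposes."""
    return requested_purpose not in record.declared_purposes


# A fingerprint collected only for workplace attendance (the article's example).
record = BiometricRecord(
    subject_id="employee-17",
    modality="fingerprint",
    declared_purposes=frozenset({"workplace_attendance"}),
)

print(is_function_creep(record, "workplace_attendance"))  # False
print(is_function_creep(record, "credit_scoring"))        # True
```

Storing the declared purposes alongside the data is what makes the check possible at all: without that record, a later use cannot be compared against what the subject originally agreed to.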

Function creep often starts innocently, driven by the desire to maximize the utility of existing data. Organizations may repurpose biometric databases to save costs or meet new demands, but this incremental expansion can erode user trust and autonomy. The permanence of biometric data raises the stakes: unlike a password, you can’t change your face or fingerprints if they’re compromised. Understanding this phenomenon is critical to addressing its risks.

The unauthorized expansion of biometric data use poses multiple risks, from individual privacy violations to broader societal harms. The primary concerns are outlined below.

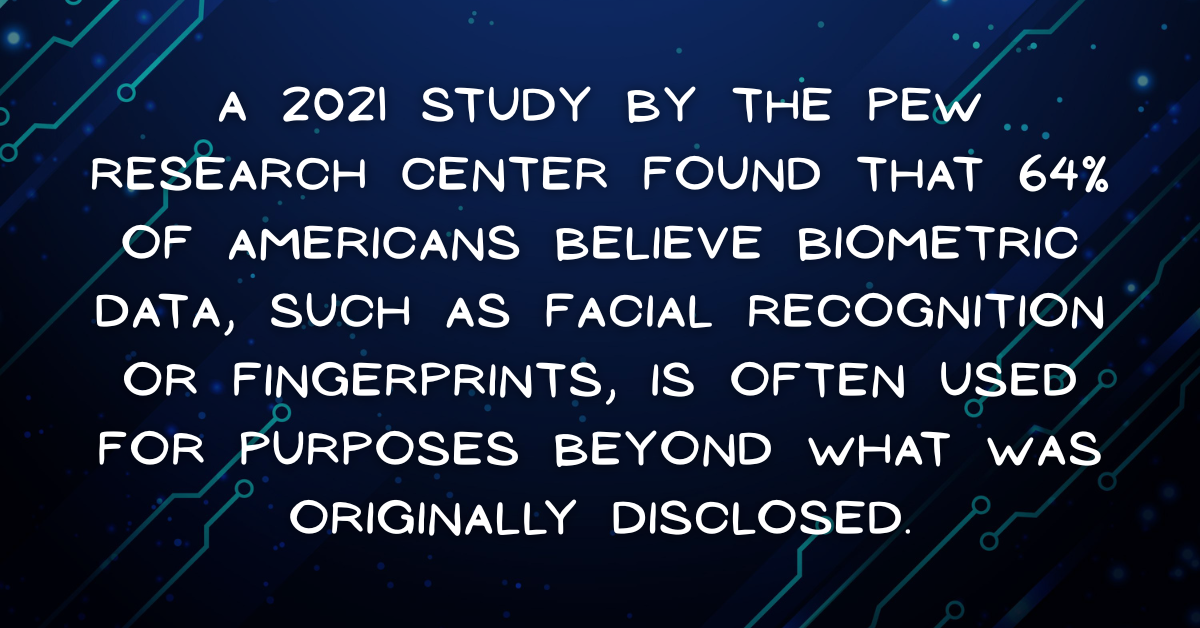

When biometric data is used beyond its original purpose, individuals lose control over their personal information. For example, a facial scan collected for a seemingly benign purpose, like unlocking a device, might later be shared with third parties for profiling or surveillance. This loss of agency undermines trust in institutions and technology providers.

Repurposing biometric data increases the value of databases as targets for cyberattacks. A breach in a multi-purpose biometric system could expose sensitive information across contexts—financial, governmental, or personal—leading to identity theft or worse.

Function creep often bypasses ethical principles like informed consent and proportionality. Using biometric data for unanticipated purposes, such as targeting ads or tracking individuals, can exploit vulnerable populations, particularly in regions with weak regulatory oversight.

Many countries lack comprehensive laws governing biometric data, allowing function creep to flourish. Without clear rules, organizations face little accountability for repurposing data, leaving users vulnerable.

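Key concerns include loss of individual control over personal data, higher-value targets for cyberattacks, erosion of informed consent and proportionality, and weak regulatory accountability.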

To illustrate the tangible impacts of function creep, we examine four documented cases where biometric systems were extended beyond their original scope, highlighting the consequences and responses.

Clearview AI developed a facial recognition database by scraping billions of images from social media platforms, initially intended for limited security applications.

The company shared this database with law enforcement agencies worldwide to identify individuals in criminal investigations, far beyond the original context of collection.

This unauthorized expansion sparked widespread criticism for violating privacy, as social media users were unaware their images were being used for surveillance.

Clearview AI faced legal challenges, including fines in multiple jurisdictions for “illegal” data practices, prompting calls for stricter facial recognition regulations.

India’s Aadhaar program, the world’s largest biometric ID system, collects fingerprints and iris scans to provide a unique ID for accessing government services like welfare distribution.

Private companies and government agencies have accessed Aadhaar data for unrelated purposes, such as verifying customers for banking or telecom services, often without clear consent.

Alleged data leaks and unauthorized access raised concerns about privacy and security, with critics warning of surveillance and exploitation risks in this massive database.

Legal challenges and public advocacy groups have pushed for stronger data protection laws in India, though regulatory gaps persist.

In 2021, 7-Eleven stores in Australia implemented facial recognition to monitor customers for security and loss prevention.

The collected biometric data was also used to build demographic profiles for marketing purposes without customer consent.

This revelation led to public outcry and regulatory scrutiny, as customers were unaware their biometric data was being repurposed.

Australia’s privacy regulator investigated, and 7-Eleven faced pressure to revise its data practices, highlighting the need for transparency in retail surveillance.

Google collects biometric identifiers, such as voiceprints and facial geometry, through products like Google Photos and Google Assistant for user authentication and functionality.

Texas Attorney General Ken Paxton, in a lawsuit filed in 2022 and settled in 2025, accused Google of using this data to enhance AI models and profile users beyond the initial scope, without adequate consent.

These allegations raised concerns about function creep in consumer tech, as users were unaware their biometric data was being repurposed for AI training or advertising.

The lawsuit prompted calls for clearer data usage policies, though global standards for biometric data in tech remain inconsistent.

Addressing function creep requires proactive measures that balance innovation with ethical responsibility. The strategies below aim to curb its risks.

Governments must enact clear laws limiting biometric data use, mandating transparency, and enforcing penalties. The EU’s GDPR offers a model, though more specific biometric regulations are needed to address function creep.

Organizations should implement robust consent mechanisms, ensuring users are informed about data uses and can opt out. User-friendly interfaces that explain data purposes before collection can empower individuals to make informed choices.
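A consent mechanism of this kind could be sketched as a per-purpose ledger that denies by default. The `ConsentLedger` class and the purpose names below are hypothetical and kept in memory for illustration; a real system would also need durable storage, authentication, and an audit trail.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ConsentLedger:
    """Hypothetical in-memory ledger mapping each subject to consented purposes."""
    _grants: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, subject_id: str, purpose: str) -> None:
        # Record consent for one specific purpose only.
        self._grants.setdefault(subject_id, set()).add(purpose)

    def revoke(self, subject_id: str, purpose: str) -> None:
        # Opting out removes the purpose; a no-op if it was never granted.
        self._grants.get(subject_id, set()).discard(purpose)

    def allows(self, subject_id: str, purpose: str) -> bool:
        # Default is deny: no recorded grant means no processing.
        return purpose in self._grants.get(subject_id, set())


ledger = ConsentLedger()
ledger.grant("customer-42", "device_unlock")

print(ledger.allows("customer-42", "device_unlock"))  # True
print(ledger.allows("customer-42", "ad_targeting"))   # False

ledger.revoke("customer-42", "device_unlock")
print(ledger.allows("customer-42", "device_unlock"))  # False
```

Keying consent by purpose rather than by a single yes/no flag means a new use of the data requires a new, explicit grant instead of inheriting an old one.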

To prevent unauthorized repurposing, organizations can adopt:
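One safeguard in this spirit is purpose-scoped pseudonymization, sketched below with Python's standard `hmac` module. The `PURPOSE_KEYS` table and `scoped_pseudonym` helper are assumptions made for illustration. Each purpose gets its own secret key, so the identifier stored for one purpose cannot be matched against the identifier stored for another without access to both keys. This limits linkage of record identifiers only; it does not by itself protect the biometric template.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-purpose secret keys. In practice these would be held in a
# key management system, each available only to the system serving that purpose.
PURPOSE_KEYS = {
    "attendance": secrets.token_bytes(32),
    "building_access": secrets.token_bytes(32),
}


def scoped_pseudonym(subject_id: str, purpose: str) -> str:
    """Derive a purpose-specific identifier with HMAC-SHA256."""
    key = PURPOSE_KEYS[purpose]  # KeyError for a purpose that was never provisioned
    return hmac.new(key, subject_id.encode("utf-8"), hashlib.sha256).hexdigest()


attendance_id = scoped_pseudonym("employee-17", "attendance")
access_id = scoped_pseudonym("employee-17", "building_access")

# Same person, same purpose: the identifier is stable.
print(attendance_id == scoped_pseudonym("employee-17", "attendance"))  # True
# Same person, different purposes: the identifiers differ, so the two
# datasets cannot be joined on this column without both keys.
print(attendance_id == access_id)  # False
```

A request for an unprovisioned purpose fails outright with a `KeyError`, which makes any attempt to extend the data to a new use an explicit, reviewable step rather than a silent one.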

Educating users about function creep empowers them to demand accountability. Public campaigns and media coverage can highlight risks and encourage scrutiny of biometric data practices.

These solutions face challenges, including resistance from organizations prioritizing efficiency and the slow pace of global regulatory alignment. However, they are essential to protect users in an era of expanding biometric use.

Function creep in biometrics is a pressing issue that threatens privacy, security, and trust in a world increasingly reliant on these technologies. The cases of Clearview AI, Aadhaar, 7-Eleven, and Google illustrate how biometric data, collected for specific purposes, can be repurposed in ways that erode individual autonomy and expose users to risks. By strengthening regulations, enhancing consent, implementing technical safeguards, and raising awareness, we can mitigate these dangers. As biometric systems continue to evolve, staying vigilant helps ensure that their benefits, security and convenience, do not come at the cost of our fundamental rights.